Quantitative content analysis with GPT-4

Everyone is talking about GPT-4, and for good reason. The generative capabilities are amazing, generating impressive and coherent text.

On the other hand, I've been playing with the tool as a way to do traditional NLP tasks really quickly and with no training or examples (aka "zero shot learning"). This makes natural language tasks for small experiments and analysis fun and easy.

Zero-shot learning

Zero-shot learning refers to the ability of GPT-4 to perform tasks without any prior examples or training data. This feature is particularly useful for small-scale projects and rapid experimentation, as it eliminates the need to gather extensive training data. With its vast knowledge base, GPT-4 can quickly understand the task at hand and generate relevant outputs.

For instance, GPT-4 can be utilized for sentiment analysis, summarization, and even question-answering systems without any additional training. This makes it an ideal tool for businesses seeking to quickly analyze customer feedback, researchers aiming to extract insights from large text datasets, or educators looking to evaluate student responses. GPT-4's zero-shot learning capability can be leveraged for tasks like text classification, entity recognition, and even text-to-speech synthesis. Interestingly, it does a pretty good job of providing numerical outputs.

An example: Emotion Similarity

For example, here's a prompt to try out:

Generate a list of the most common 12 emotions. Then generate a correlation matrix. Each value in the matrix is a number to 1 decimal point, from -1.0 (perfectly negative correlation) to 1.0 (perfectly positive correlation). Eg. a score of 0.7 indicates fairly high positive correlation. The correlation matrix should be in CSV format.

The output:

Here's a list of 12 common emotions:

Happiness

Sadness

Anger

Fear

Surprise

Disgust

Love

Jealousy

Envy

Pride

Shame

Guilt

It continues:

Below is a correlation matrix in CSV format. Please note that the correlations are not based on scientific research or data, but are generated as an example for illustrative purposes:

and now the matrix:

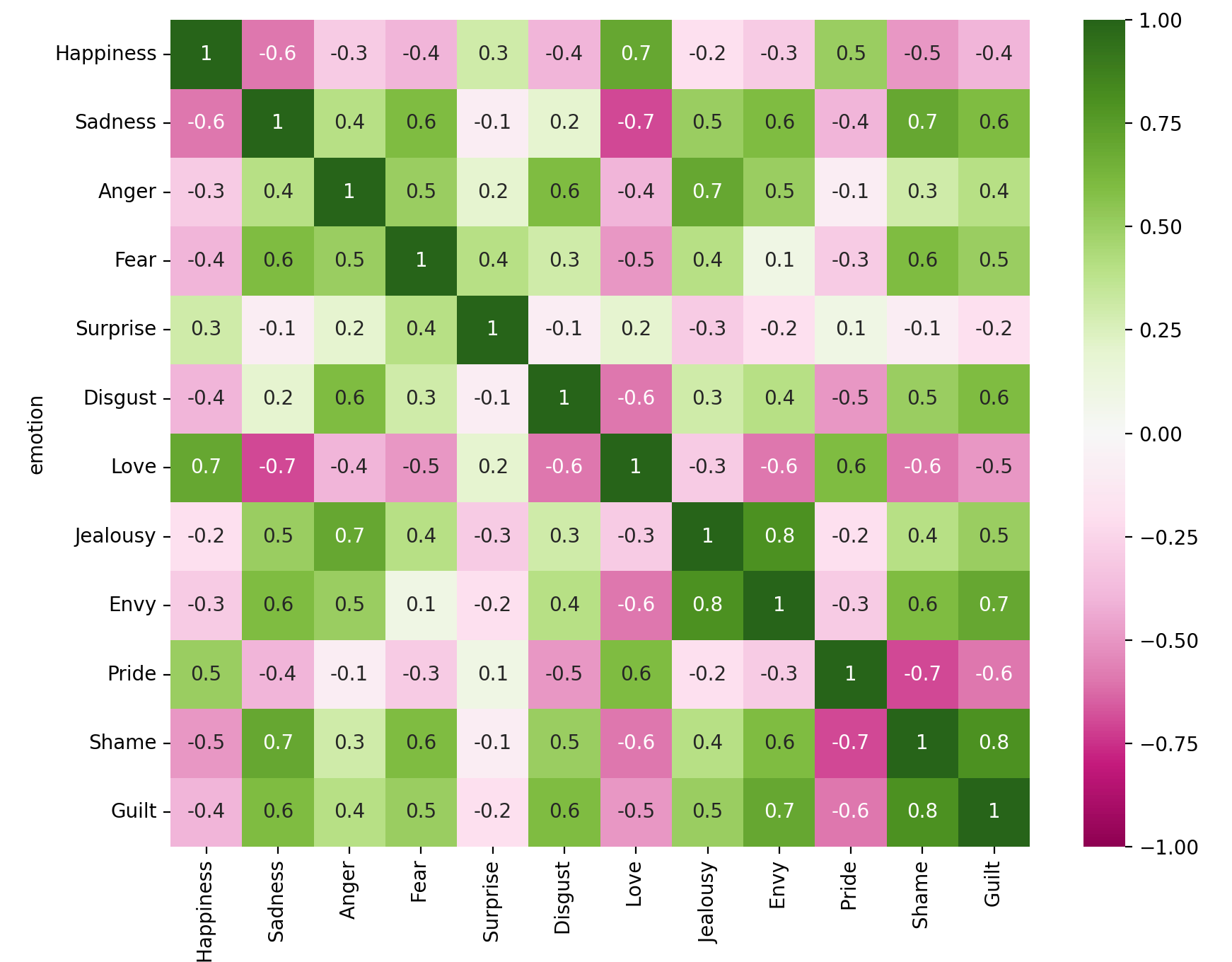

, Happiness, Sadness, Anger, Fear, Surprise, Disgust, Love, Jealousy, Envy, Pride, Shame, Guilt

Happiness, 1.0, -0.6, -0.3, -0.4, 0.3, -0.4, 0.7, -0.2, -0.3, 0.5, -0.5, -0.4

Sadness, -0.6, 1.0, 0.4, 0.6, -0.1, 0.2, -0.7, 0.5, 0.6, -0.4, 0.7, 0.6

Anger, -0.3, 0.4, 1.0, 0.5, 0.2, 0.6, -0.4, 0.7, 0.5, -0.1, 0.3, 0.4

Fear, -0.4, 0.6, 0.5, 1.0, 0.4, 0.3, -0.5, 0.4, 0.1, -0.3, 0.6, 0.5

Surprise, 0.3, -0.1, 0.2, 0.4, 1.0, -0.1, 0.2, -0.3, -0.2, 0.1, -0.1, -0.2

Disgust, -0.4, 0.2, 0.6, 0.3, -0.1, 1.0, -0.6, 0.3, 0.4, -0.5, 0.5, 0.6

Love, 0.7, -0.7, -0.4, -0.5, 0.2, -0.6, 1.0, -0.3, -0.6, 0.6, -0.6, -0.5

Jealousy, -0.2, 0.5, 0.7, 0.4, -0.3, 0.3, -0.3, 1.0, 0.8, -0.2, 0.4, 0.5

Envy, -0.3, 0.6, 0.5, 0.1, -0.2, 0.4, -0.6, 0.8, 1.0, -0.3, 0.6, 0.7

Pride, 0.5, -0.4, -0.1, -0.3, 0.1, -0.5, 0.6, -0.2, -0.3, 1.0, -0.7, -0.6

Shame

Notice it gets cut off, you can prod it to continue:

Continue where your output was truncated from your last messageThen the final csv looks something like this, which we'll save as emotions.csv

, Happiness, Sadness, Anger, Fear, Surprise, Disgust, Love, Jealousy, Envy, Pride, Shame, Guilt

Happiness, 1.0, -0.6, -0.3, -0.4, 0.3, -0.4, 0.7, -0.2, -0.3, 0.5, -0.5, -0.4

Sadness, -0.6, 1.0, 0.4, 0.6, -0.1, 0.2, -0.7, 0.5, 0.6, -0.4, 0.7, 0.6

Anger, -0.3, 0.4, 1.0, 0.5, 0.2, 0.6, -0.4, 0.7, 0.5, -0.1, 0.3, 0.4

Fear, -0.4, 0.6, 0.5, 1.0, 0.4, 0.3, -0.5, 0.4, 0.1, -0.3, 0.6, 0.5

Surprise, 0.3, -0.1, 0.2, 0.4, 1.0, -0.1, 0.2, -0.3, -0.2, 0.1, -0.1, -0.2

Disgust, -0.4, 0.2, 0.6, 0.3, -0.1, 1.0, -0.6, 0.3, 0.4, -0.5, 0.5, 0.6

Love, 0.7, -0.7, -0.4, -0.5, 0.2, -0.6, 1.0, -0.3, -0.6, 0.6, -0.6, -0.5

Jealousy, -0.2, 0.5, 0.7, 0.4, -0.3, 0.3, -0.3, 1.0, 0.8, -0.2, 0.4, 0.5

Envy, -0.3, 0.6, 0.5, 0.1, -0.2, 0.4, -0.6, 0.8, 1.0, -0.3, 0.6, 0.7

Pride, 0.5, -0.4, -0.1, -0.3, 0.1, -0.5, 0.6, -0.2, -0.3, 1.0, -0.7, -0.6

Shame, -0.5, 0.7, 0.3, 0.6, -0.1, 0.5, -0.6, 0.4, 0.6, -0.7, 1.0, 0.8

Guilt, -0.4, 0.6, 0.4, 0.5, -0.2, 0.6, -0.5, 0.5, 0.7, -0.6, 0.8, 1.0

Let's visualize this, hopping into ipython or a jupyter notebook:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib

df = pd.read_csv('emotions.csv')

df=df.rename(columns={'Unnamed: 0':'emotion'}).set_index('emotion')

plt.figure(figsize=(12,6))

heatmap = sns.heatmap(df, vmin=-1, vmax=1, annot=True, cmap='PiYG')You'll get something like this

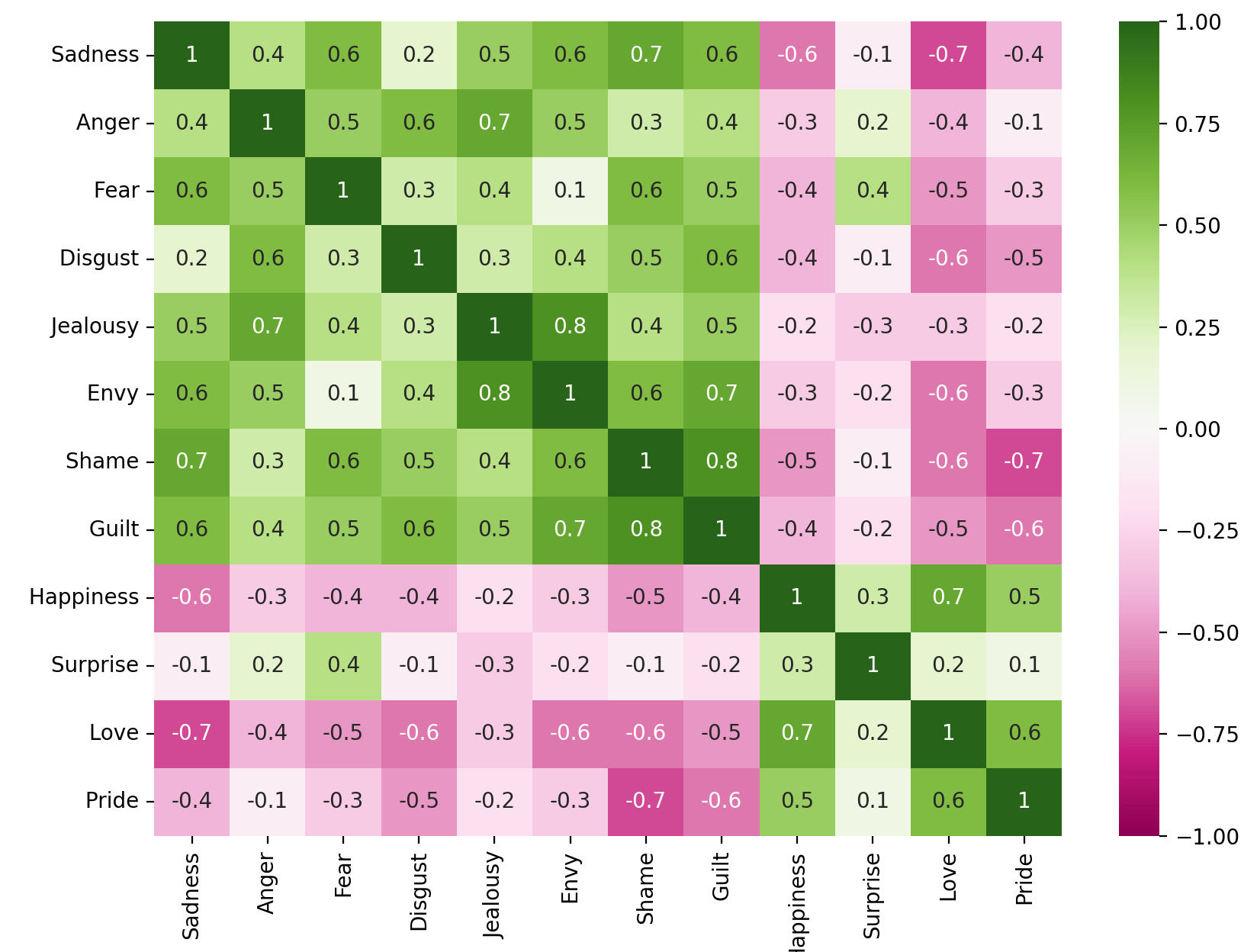

Now let's use this cluster_corr() function to group together similar emotions into clusters and the quality of the similarity scores really jumps out:

heatmap = sns.heatmap(cluster_corr(df), vmin=-1, vmax=1, annot=True,cmap="PiYG")

All the negative emotions are correctly grouped together, then the more positive-valence emotions Happiness, Love, Pride as well. It nails it with the close similarity between Shame and Guilt, and Envy and Jealous, for example. "Surprise" is the oddball of the group, which makes sense: it can be positively or negatively experienced (positively surprised by a birthday party with your best friends, or negatively by a sudden layoff from your tech job)

Try it out with your own list of emotions, another category of things, and I'm sure you'll be surprised at the result. You're leaning on the symbolic relationships learned by the LLM, plus its ability to generate output of a certain style on command. Much easier than assembling your own corpus of emotion-tagged text to build your own model from scratch!

Cleaning up the AI's mess

The inherent randomness means sometimes weird things will happen, like a row of data getting an extra column, or a JSON object not formatted correctly. So some validation of output and rerunning as needed is always necessary.

Also, there's a ton to be done around using a LangChain-like approach to retrieve and prompt the model with relevant documents: think chat messages, academic research papers, internal wiki pages, blog posts, you name it. Many AI startups are addressing some piece of this, but if you want to get started, getting these type of infra dialed is 100% critical and not exactly easy.

We're working on some light infrastructure to help leverage ChatGPT within your org more easily. If you're considering AI-related tasks and these topics are of interest, feel free to reach out to kyle@kaleidoscopedata.com